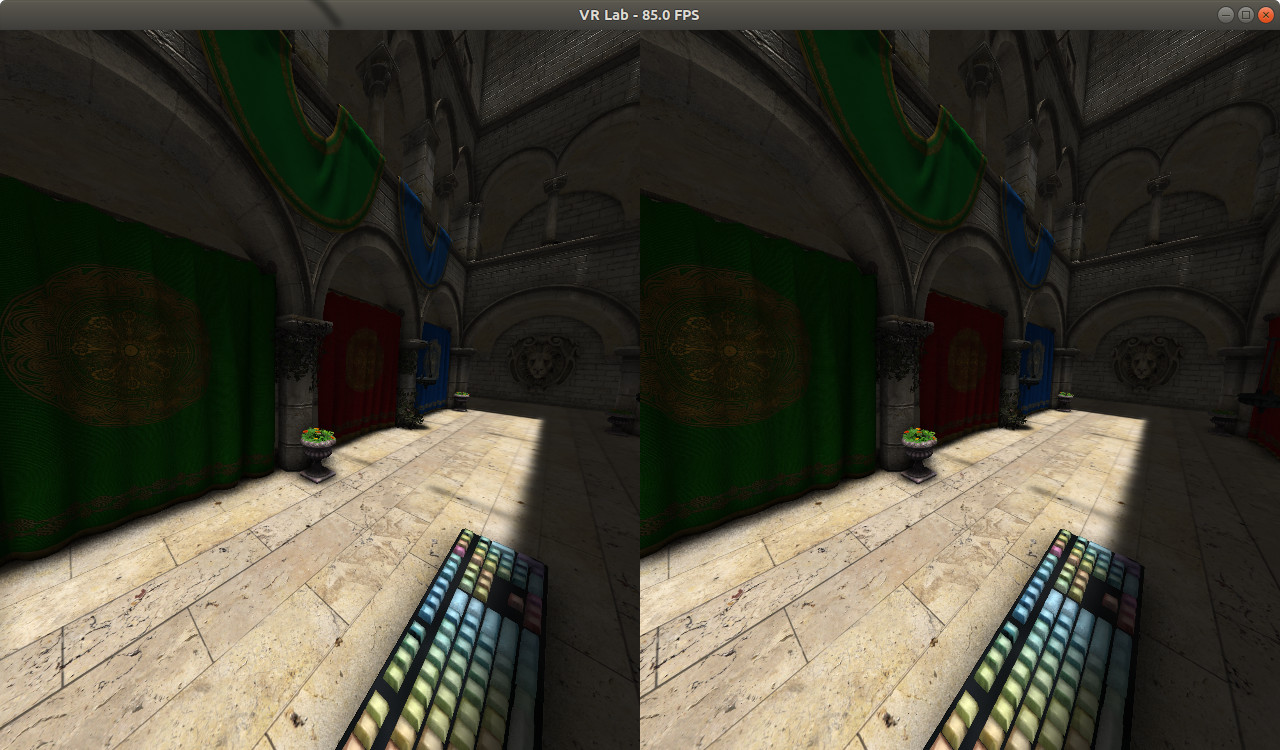

Clustered Light Shading VR Renderer

Real-time VR rendering with Rust and OpenGL

NOTE: This post was generated with AI (based on an actual project I did) to get some content here. I advise against reading it since it's awful. A manual rewrite is pending...

Virtual reality rendering presents unique challenges for real-time graphics. When I set out to build a VR-ready renderer, I wanted to explore advanced lighting techniques while keeping the frame rate high enough for a comfortable VR experience. This led me to develop a clustered light shading implementation using Rust and OpenGL.

The Challenge

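Rendering complex scenes in VR requires maintaining high frame rates while rendering the same scene twice, once for each eye. Traditional forward rendering struggles with many dynamic lights, because every shaded fragment has to consider every light. Deferred rendering avoids that cost but has its own trade-offs, such as a bandwidth-heavy G-buffer and awkward support for MSAA and transparency. Clustered light shading offers a middle ground. It divides the view frustum into a 3D grid of clusters (screen-space tiles further split by depth) and determines which lights affect each cluster. Each fragment then evaluates only the lights assigned to its cluster.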
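To make the clustering idea concrete, here is a minimal Rust sketch of how a fragment could be mapped to its cluster. It assumes a hypothetical `ClusterGrid` made of screen-space tiles with exponentially spaced depth slices. This is a common scheme, but not necessarily the one used in this project. The grid dimensions and near/far planes are illustrative only.

```rust
/// Hypothetical cluster grid; the post does not state the dimensions it uses.
#[derive(Clone, Copy, Debug)]
pub struct ClusterGrid {
    pub tiles_x: u32,
    pub tiles_y: u32,
    pub slices_z: u32,
    pub near: f32,
    pub far: f32,
}

impl ClusterGrid {
    /// Map a positive view-space depth to a depth slice using exponential slicing:
    /// slice = floor(ln(z / near) / ln(far / near) * slices_z).
    pub fn depth_slice(&self, view_depth: f32) -> u32 {
        let z = view_depth.clamp(self.near, self.far);
        let t = (z / self.near).ln() / (self.far / self.near).ln();
        // t lies in [0, 1]; clamp so a depth exactly at `far` lands in the last slice.
        ((t * self.slices_z as f32) as u32).min(self.slices_z - 1)
    }

    /// Flatten (tile_x, tile_y, slice) for a pixel into one linear cluster index.
    pub fn cluster_index(&self, pixel_x: u32, pixel_y: u32, width: u32, height: u32, view_depth: f32) -> u32 {
        let tx = (pixel_x * self.tiles_x / width).min(self.tiles_x - 1);
        let ty = (pixel_y * self.tiles_y / height).min(self.tiles_y - 1);
        let tz = self.depth_slice(view_depth);
        tx + ty * self.tiles_x + tz * self.tiles_x * self.tiles_y
    }
}
```

With a 16x9x24 grid on a 1920x1080 target, the bottom-right pixel at the far plane maps to index 3455, the last of 3,456 clusters. Exponential slicing makes slice thickness grow with distance, which keeps clusters roughly uniform in shape in view space.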

Technical Approach

The renderer is built entirely in Rust, leveraging the language's performance characteristics and memory safety guarantees. This proved particularly valuable when working with the complex state management required for OpenGL applications. The codebase integrates with SteamVR on Linux, enabling testing with actual VR hardware.

One interesting challenge was generating spheres for light volumes. Rather than using precomputed lookup tables, I implemented iterative subdivision of Platonic solids. New vertices are placed by arc-length interpolation along the sphere's surface instead of along the flat edges. This gives more control over the tessellation and produces high-quality spheres without any precomputed data.
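The post does not show its subdivision code, so the following is only a sketch of the idea under stated assumptions. It starts from an octahedron (the choice of base solid is an assumption) and splits each triangle into four per level. Each new vertex is placed with spherical linear interpolation (`slerp`), which puts it at a given fraction of arc length along the great circle between the edge's endpoints. For a midpoint, this coincides with normalizing the chord midpoint. The general `slerp` becomes useful when an edge is split into more than two evenly spaced segments. All names are hypothetical.

```rust
type Vec3 = [f32; 3];

fn dot(a: Vec3, b: Vec3) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Spherical linear interpolation between unit vectors: moves a fraction `t` of the
/// arc length from `a` towards `b`, staying on the unit sphere.
fn slerp(a: Vec3, b: Vec3, t: f32) -> Vec3 {
    let theta = dot(a, b).clamp(-1.0, 1.0).acos();
    let sin_theta = theta.sin();
    if sin_theta.abs() < 1e-6 {
        return a; // degenerate (coincident or antipodal); never hit for octahedron edges
    }
    let wa = ((1.0 - t) * theta).sin() / sin_theta;
    let wb = (t * theta).sin() / sin_theta;
    [wa * a[0] + wb * b[0], wa * a[1] + wb * b[1], wa * a[2] + wb * b[2]]
}

/// Unit octahedron; each triangle is wound so (b - a) x (c - a) points outward.
fn octahedron() -> Vec<[Vec3; 3]> {
    let (px, nx) = ([1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]);
    let (py, ny) = ([0.0, 1.0, 0.0], [0.0, -1.0, 0.0]);
    let (pz, nz) = ([0.0, 0.0, 1.0], [0.0, 0.0, -1.0]);
    vec![
        [px, py, pz], [py, nx, pz], [nx, ny, pz], [ny, px, pz],
        [py, px, nz], [nx, py, nz], [ny, nx, nz], [px, ny, nz],
    ]
}

/// Split every triangle into four `levels` times, putting each new vertex at the
/// arc-length midpoint of its edge. Returns a non-indexed triangle list.
pub fn subdivided_sphere(levels: u32) -> Vec<[Vec3; 3]> {
    let mut tris = octahedron();
    for _ in 0..levels {
        let mut next = Vec::with_capacity(tris.len() * 4);
        for [a, b, c] in tris {
            let ab = slerp(a, b, 0.5);
            let bc = slerp(b, c, 0.5);
            let ca = slerp(c, a, 0.5);
            // Four children that keep the parent's winding order.
            next.push([a, ab, ca]);
            next.push([ab, b, bc]);
            next.push([ca, bc, c]);
            next.push([ab, bc, ca]);
        }
        tris = next;
    }
    tris
}
```

`subdivided_sphere(3)` yields 8 * 4^3 = 512 triangles. Vertices are not shared between triangles here. A real light-volume mesh would deduplicate them into an index buffer.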

The rendering pipeline is split into distinct passes, with careful attention to frame timing: in VR, even small amounts of added latency are immediately noticeable. The implementation also supports hot shader reloading, which significantly speeds up iteration when tuning visual effects.
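The post does not describe how shader changes are detected. One simple approach, assumed here, is to poll each shader file's modification time once per frame using only the standard library. The `ShaderWatcher` type below is hypothetical. A file that disappears or reappears also counts as a change, so a reload should keep the previously linked program if compilation fails.

```rust
use std::collections::HashMap;
use std::fs;
use std::path::{Path, PathBuf};
use std::time::SystemTime;

/// Hypothetical polling watcher: remembers each file's last modification time
/// (None if the file could not be read) and reports files whose stamp changed.
#[derive(Default)]
pub struct ShaderWatcher {
    stamps: HashMap<PathBuf, Option<SystemTime>>,
}

fn mtime(path: &Path) -> Option<SystemTime> {
    fs::metadata(path).and_then(|m| m.modified()).ok()
}

impl ShaderWatcher {
    pub fn new() -> Self {
        Self::default()
    }

    /// Start tracking a shader source file, recording its current timestamp.
    pub fn watch(&mut self, path: impl Into<PathBuf>) {
        let path = path.into();
        let stamp = mtime(&path);
        self.stamps.insert(path, stamp);
    }

    /// Call once per frame (or every few frames); returns the files to recompile.
    pub fn poll(&mut self) -> Vec<PathBuf> {
        let mut changed = Vec::new();
        for (path, stamp) in self.stamps.iter_mut() {
            let now = mtime(path);
            if now != *stamp {
                *stamp = now;
                changed.push(path.clone());
            }
        }
        changed
    }
}
```

A render loop would call `poll()` and recompile only the returned paths. A few `metadata` calls per frame are usually cheap. An OS file-notification API would avoid polling altogether, at the cost of an extra dependency.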

Performance Considerations

The current implementation makes approximately 8,000 OpenGL calls per frame for material management. This works, but it leaves substantial room for optimization. Planned improvements include bindless textures and multi-draw commands. Together, these could replace most per-draw state changes and draw calls with a handful of calls and dramatically reduce driver overhead.
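As a rough illustration of where multi-draw commands would help, the sketch below builds the per-draw records that `glMultiDrawElementsIndirect` (OpenGL 4.3+) reads from a buffer bound to `GL_DRAW_INDIRECT_BUFFER`. The struct mirrors the `DrawElementsIndirectCommand` layout from the OpenGL specification. `MeshRange` and the idea of storing a material index in `base_instance` are assumptions for illustration, not something the post describes.

```rust
/// One indirect draw record, laid out like the specification's
/// DrawElementsIndirectCommand: five tightly packed 32-bit values.
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct DrawElementsIndirectCommand {
    pub count: u32,          // number of indices to draw
    pub instance_count: u32, // 1 for ordinary, non-instanced meshes
    pub first_index: u32,    // offset into the index buffer, in indices (not bytes)
    pub base_vertex: i32,    // added to every fetched index
    pub base_instance: u32,  // here (by assumption) repurposed as a material index
}

/// Hypothetical description of one mesh inside shared vertex/index buffers.
pub struct MeshRange {
    pub index_count: u32,
    pub first_index: u32,
    pub base_vertex: i32,
    pub material_id: u32,
}

/// Build one command per mesh so the whole list can be submitted with a single
/// multi-draw call instead of one bind-and-draw sequence per mesh.
pub fn build_commands(meshes: &[MeshRange]) -> Vec<DrawElementsIndirectCommand> {
    meshes
        .iter()
        .map(|m| DrawElementsIndirectCommand {
            count: m.index_count,
            instance_count: 1,
            first_index: m.first_index,
            base_vertex: m.base_vertex,
            base_instance: m.material_id,
        })
        .collect()
}
```

After the commands are uploaded, one `glMultiDrawElementsIndirect(GL_TRIANGLES, GL_UNSIGNED_INT, 0, draw_count, 0)` call could replace a draw call per mesh. The shader can recover the material index from `base_instance` through an instanced vertex attribute (divisor 1) or `gl_BaseInstance` in GLSL 4.60. Storing bindless texture handles per material would then remove the per-draw texture binds.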

The Sponza scene serves as the primary test environment, providing a good mix of geometric complexity and material variety. Rendering it in real time with ambient occlusion while maintaining VR frame rates demonstrates the viability of the approach.

As a fun aside, the project also includes a virtual keyboard with realistic key pressure physics—a useful tool for exploring VR interaction paradigms.

What I Learned

Working at the intersection of systems programming and graphics has been incredibly rewarding. Rust's type system caught numerous bugs that would have been runtime errors in C++, particularly around resource lifetime management and thread safety. The ecosystem around Rust for graphics development has matured significantly, with solid bindings for OpenGL and VR APIs.

The project reinforced the importance of profiling and measurement in graphics programming. VR applications have strict performance budgets, and understanding where time is spent each frame is essential for optimization. Frame timing analysis tools built into the project proved invaluable for identifying bottlenecks.
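The post does not show its frame timing tools. As a minimal sketch, assuming a 90 Hz headset (a common refresh rate, not one the post states), the hypothetical `FrameStats` type below keeps a rolling window of frame times. It counts how many frames exceeded the roughly 11.1 ms per-frame budget.

```rust
use std::collections::VecDeque;
use std::time::Duration;

/// Hypothetical rolling frame-time tracker compared against a refresh-rate budget.
pub struct FrameStats {
    budget: Duration,
    window: usize,
    samples: VecDeque<Duration>,
}

impl FrameStats {
    /// `refresh_hz` is the assumed headset refresh rate; `window` is how many frames to keep.
    pub fn new(refresh_hz: f64, window: usize) -> Self {
        assert!(refresh_hz > 0.0 && window > 0, "refresh rate and window must be positive");
        Self {
            budget: Duration::from_secs_f64(1.0 / refresh_hz),
            window,
            samples: VecDeque::with_capacity(window),
        }
    }

    pub fn budget(&self) -> Duration {
        self.budget
    }

    /// Add one frame's duration, discarding the oldest sample once the window is full.
    pub fn record(&mut self, frame_time: Duration) {
        if self.samples.len() == self.window {
            self.samples.pop_front();
        }
        self.samples.push_back(frame_time);
    }

    /// Mean frame time over the window, or None if nothing has been recorded.
    pub fn average(&self) -> Option<Duration> {
        if self.samples.is_empty() {
            return None;
        }
        let total: Duration = self.samples.iter().sum();
        Some(total / self.samples.len() as u32)
    }

    /// Frames in the window that took longer than the budget.
    pub fn over_budget(&self) -> usize {
        self.samples.iter().filter(|&&t| t > self.budget).count()
    }
}
```

In a render loop, each frame's duration could come from the difference between successive `Instant::now()` readings and be passed to `record`. Averages alone can hide individual spikes, which is why the over-budget count is tracked separately.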

Future Directions

The next major milestone is implementing the actual clustered light shading algorithm with support for multiple dynamic light sources. The current architecture provides a solid foundation for this work. Additional optimizations around bindless rendering and reducing draw call overhead will help scale to more complex scenes.
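Since light assignment is still future work, here is one way it could look, as a hedged CPU-side sketch. It assumes each cluster has a view-space AABB and each light a bounding sphere. A light is assigned to every cluster whose box the sphere touches, using a closest-point distance test. The brute-force loop is O(clusters x lights), and renderers often move this work into a compute shader. The types and function names are hypothetical.

```rust
type Vec3 = [f32; 3];

/// Hypothetical point light in view space with a finite influence radius.
#[derive(Clone, Copy, Debug)]
pub struct PointLight {
    pub center: Vec3,
    pub radius: f32,
}

/// Hypothetical view-space bounds of one cluster (min <= max on every axis).
#[derive(Clone, Copy, Debug)]
pub struct Aabb {
    pub min: Vec3,
    pub max: Vec3,
}

/// Squared distance from a point to the closest point of the box (0 if inside).
fn sq_dist_point_aabb(p: Vec3, b: &Aabb) -> f32 {
    let mut d2 = 0.0;
    for i in 0..3 {
        let closest = p[i].clamp(b.min[i], b.max[i]);
        d2 += (p[i] - closest) * (p[i] - closest);
    }
    d2
}

/// For every cluster, collect the indices of the lights whose sphere touches it.
pub fn assign_lights(clusters: &[Aabb], lights: &[PointLight]) -> Vec<Vec<u32>> {
    clusters
        .iter()
        .map(|c| {
            lights
                .iter()
                .enumerate()
                .filter(|(_, l)| sq_dist_point_aabb(l.center, c) <= l.radius * l.radius)
                .map(|(i, _)| i as u32)
                .collect::<Vec<u32>>()
        })
        .collect()
}

/// Flatten per-cluster lists into (offset, count) pairs plus one shared index list.
pub fn flatten(lists: &[Vec<u32>]) -> (Vec<[u32; 2]>, Vec<u32>) {
    let mut grid = Vec::with_capacity(lists.len());
    let mut indices = Vec::new();
    for list in lists {
        grid.push([indices.len() as u32, list.len() as u32]);
        indices.extend_from_slice(list);
    }
    (grid, indices)
}
```

The flattened layout could be uploaded to two shader storage buffers. A fragment would then look up its cluster's (offset, count) pair and loop over only those lights.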

Virtual reality continues to push the boundaries of real-time rendering, and exploring these techniques hands-on has deepened my understanding of modern graphics pipelines. The full source code is available on GitHub for anyone interested in exploring these concepts further.